Benchmarks on AI models: share your results! AMD, NVIDIA, Intel, Apple, NPUs and CPU-only

- david

- Site Admin

- Posts: 417

- Joined: Sat May 21, 2016 7:50 pm

Let's test AI models on different hardware!

Join our Telegram group if you want to chat or have specific questions:

https://t.me/+h2K5CX5jEZA0MWJk

youtu.be/A5A-aP_5B5s

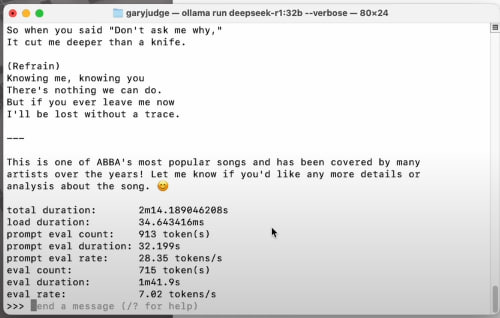

Mac mini M4 with 24GB of RAM: 6-7 t/s on the DeepSeek 32B model.

- david

- Site Admin

- Posts: 417

- Joined: Sat May 21, 2016 7:50 pm

Re: Benchmarks on AI models: share your results! AMD, NVIDIA, Intel, Apple, NPUs and CPU-only

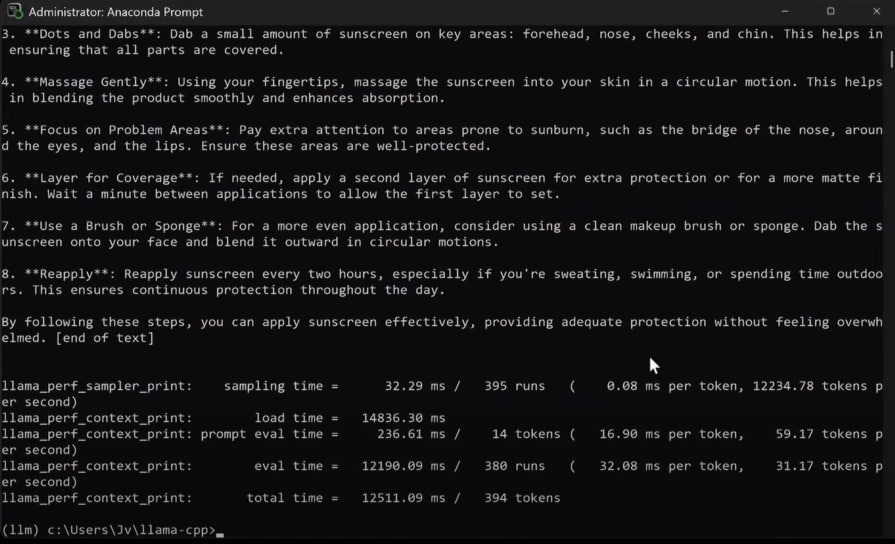

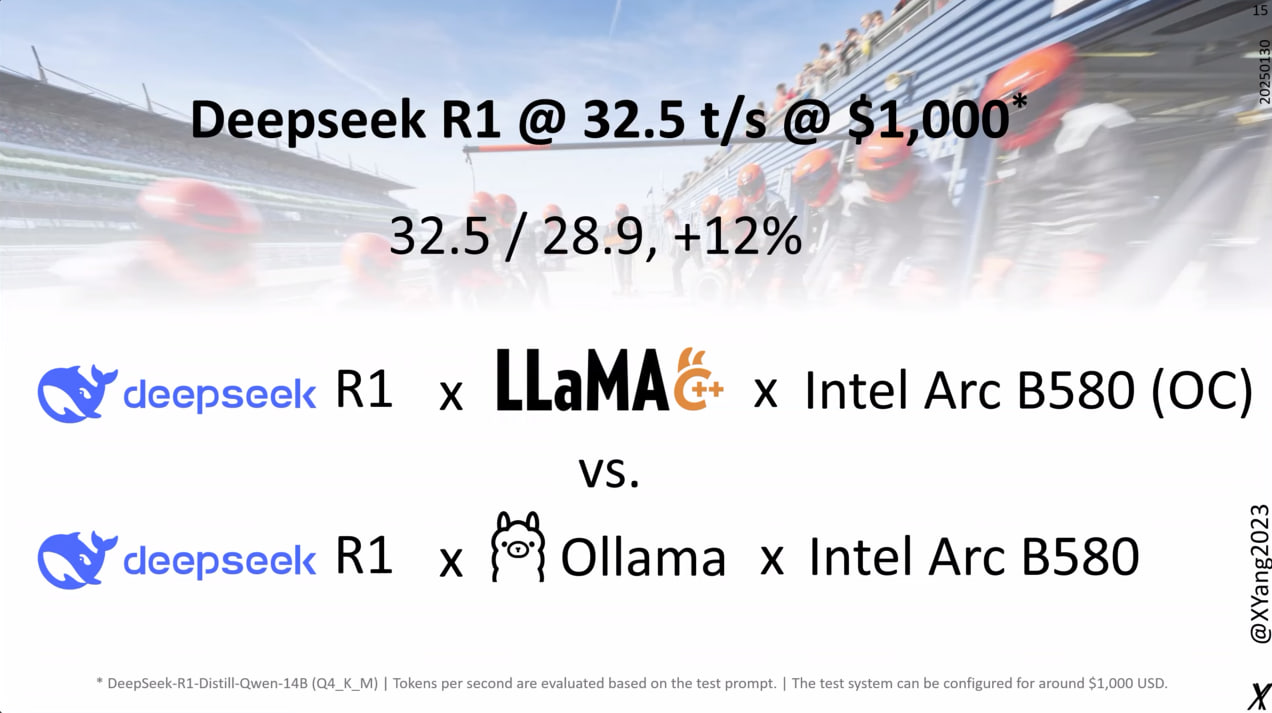

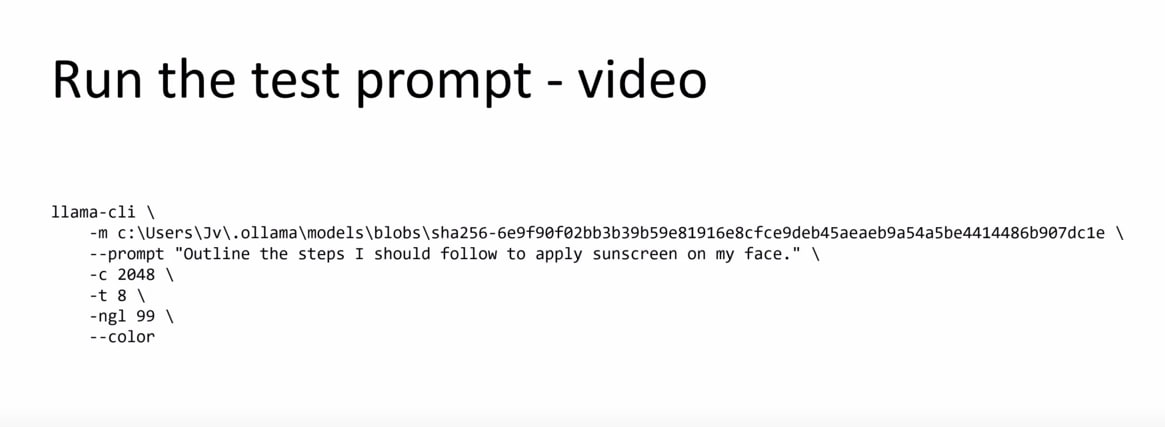

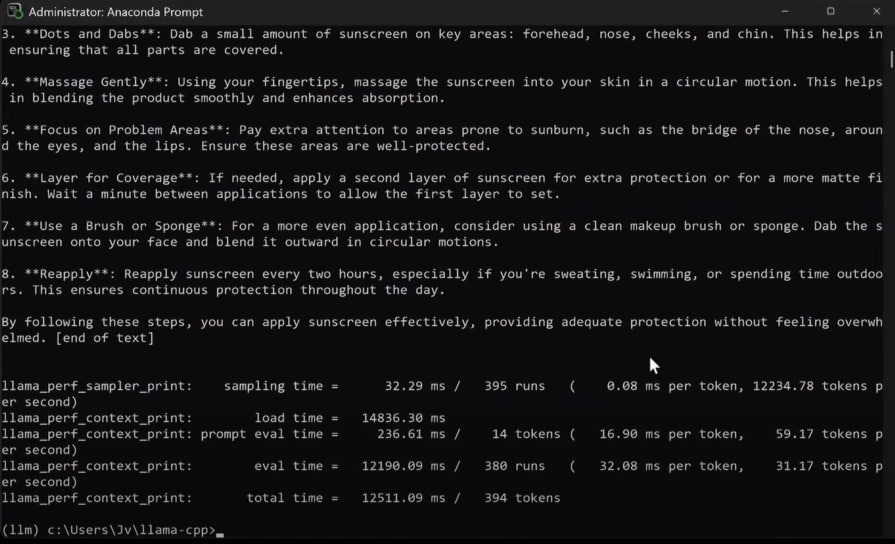

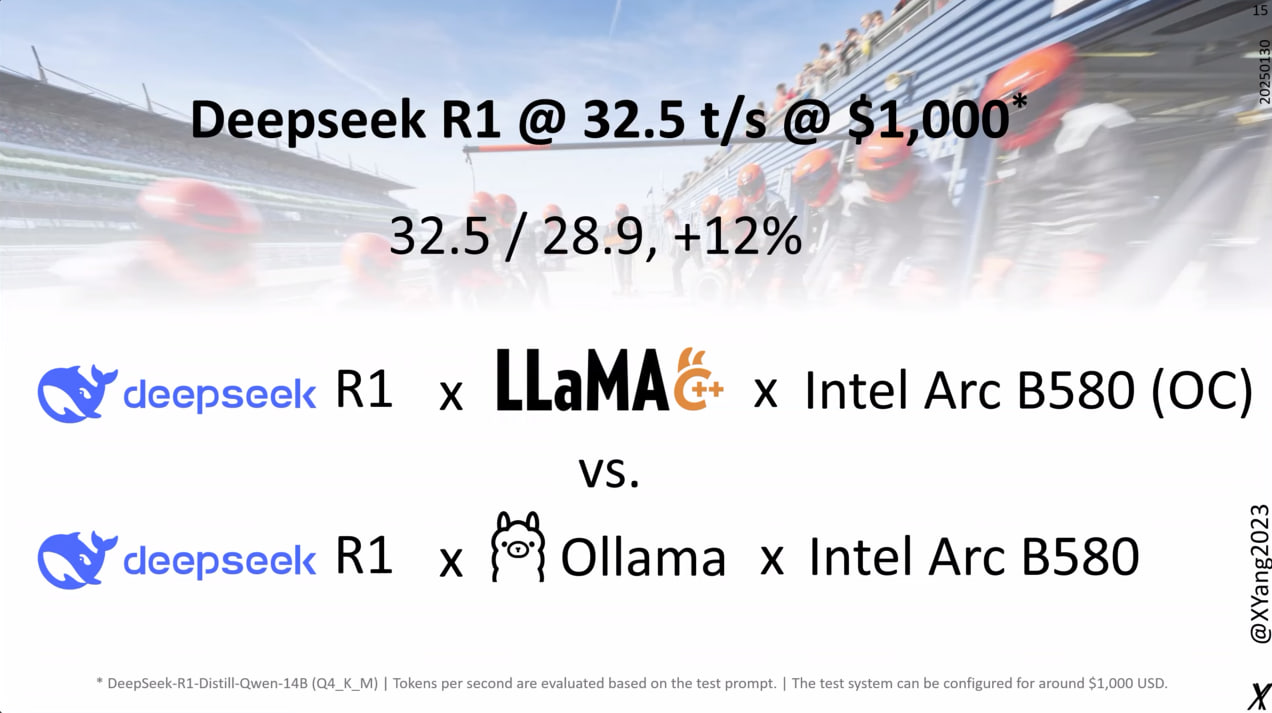

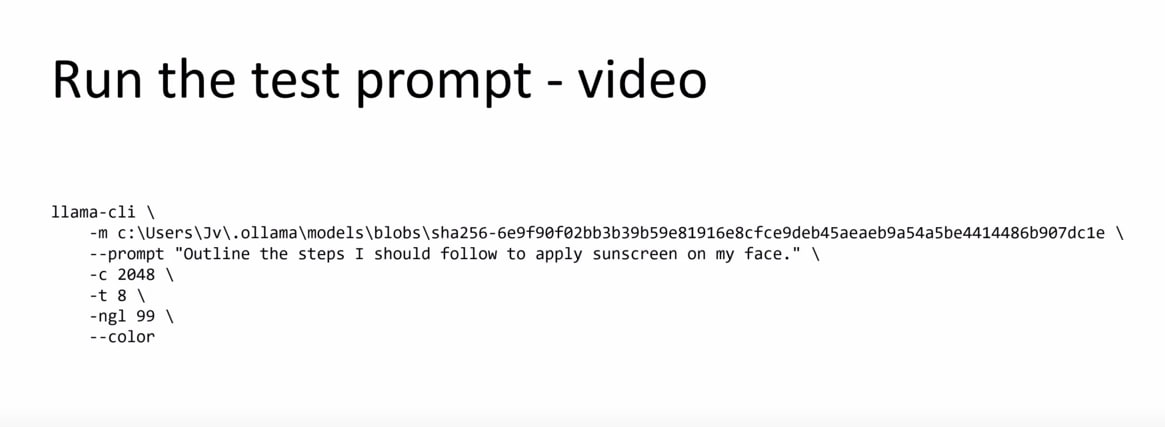

DeepSeek R1 on Intel Arc B580: More Performance with llama.cpp and Overclocking

youtu.be/-9hQdTqAIeE

- david

- Site Admin

- Posts: 417

- Joined: Sat May 21, 2016 7:50 pm

Re: Benchmarks on AI models: share your results! AMD, NVIDIA, Intel, Apple, NPUs and CPU-only

DeepSeek R1 671B MoE LLM running on Epyc 9374F and 384GB of RAM (llama.cpp, Q4_K_S, real time)

youtu.be/wKZHoGlllu4

- david

- Site Admin

- Posts: 417

- Joined: Sat May 21, 2016 7:50 pm

Re: Benchmarks on AI models: share your results! AMD, NVIDIA, Intel, Apple, NPUs and CPU-only

youtu.be/YxJYhhrhrDk

Run LLM on 5090 vs 3090 - how the 5090 performs running deepseek-r1 using Ollama?